#1: The Boat, Kandinsky 7.0 and ChatGPT 42.0, or Value–Meaning Journeys

Truth, once expressed in form (written, spoken, etc.), ceases to be truth — including what I write... the usual exceptions are metaphors, parables, fairy tales, proverbs... music.

After reading this training article, the reader will:

- do some mental gymnastics which may, perhaps, increase awareness and thereby bring more freedom to one’s thinking and, as a result, to one’s life;

- ideally, return to this training article periodically, taking notes and trying to grasp the meanings laid out and the links between them.

…and will also have an idea:

- of a simple model of society;

- of mental models;

- of the basic laws of society;

- of AI and its impact on the future;

- of whether it’s possible to create strong AI using neural networks;

- of the most important question without whose solution our prospects are, sadly, quite foggy.

From the Author

This training article is intentionally written in a “ragged tempo,” with numerous open questions, a great many explicit and hidden meanings and interconnections, and sharp transitions. It’s quite possible to “get lost” in the overload of meanings; if that happens, rest and try again. Those experienced with koans might approach this piece as a koan, and reading it as working with one. If you have questions, my contacts are at the bottom.

Lecture — presentation:

Like everyone, you are born in chains. Born into a prison you can’t smell or touch. A prison for your mind.

Context of this article

Obviously, AI has “arrived,” or at least AI models have. “The Age of AI Has Begun,” as Bill Gates titled his 03/2023 piece, in which he describes his vision and expresses hope that “new tools” will solve humanity’s old and new problems. There’s no shortage of articles and other materials on the topic. Since I work in the field and have my own view, I wrote part of this article in 03–05/2023; for personal reasons, I’m only finishing and publishing it now. Beyond AI, the article raises what seems to me the main question, which I’ll develop in subsequent publications. I also discuss “future scenarios,” so 10–12 months after writing, it should be clearer whether my reflections were mistaken.

“The errors of strong minds are frightening precisely because they become the thoughts of a multitude.” (N. G. Chernyshevsky)

Written: 30.05.2023; edited: 26.05.2024.

Introduction

All my articles are numbered, and the number matters. My pieces raise philosophical and practical questions and are meant for thoughtful, unhurried reading. The goal is to increase awareness and prompt reflection: I probably raise more questions than I answer. The article “#0: A Metaphorical Autobiography, or the Professor’s Bunker Stories” is the introduction and is about the author.

Why I’m writing this and why now

I wanted to express my thoughts this way, and I hope it’s interesting and/or useful to someone.

Millions of laypeople and seasoned veterans alike have been impressed by AI models for years now, awaiting the next versions of well-known AI products with growing amazement and anticipation (and some, perhaps, with caution). Many competent and/or famous people have urgently called for a 6-month pause in AI development.

Some colleagues in the “trade” offer their own view of how the results of their and our collective work affect society (e.g., OpenAI’s article). A Facebook post by an experienced data engineer sharing impressions of that article caught my attention.

He was extremely surprised by how things were unfolding and recalled a 2015 taxi ride in Tbilisi, when he asked the driver what he would do once cars drove themselves. What surprised him was that AI technology would affect high-paid jobs first.

I find it ironic, and worthy of deeper reflection, that large numbers of smart, well-paid people worldwide are working on something whose consequences they themselves don’t understand. The notorious OpenAI scandal, in which CEO Sam Altman was fired and then returned, fits neatly into this context: it’s ironic that people who can’t calculate the consequences of their own decisions within a single company set out to “make the world better by creating strong AI.” It’s also apt to recall Elon Musk’s “disavowal” of his “brainchild” and the irony of OpenAI becoming not all that “Open.” This is a huge contrast with the past: a cobbler knew that if he invested time and energy, he’d make shoes. One could compare our work to that of experimental scientists, but the resources invested (money, people, etc.) are enormous compared to those of lone scientists or small groups of the past, and we still don’t grasp the consequences of the end product. That’s what I want to emphasize here. I also want to raise the question I consider the most important.

“Detailed study of separate organs unteaches us to understand the life of the whole organism.” (V. O. Klyuchevsky)

What I want to share

Through metaphors I’ll present a simple mental model you can use to model socio-economic dynamics.

For whom I’m writing

I think my articles may interest “architects,” people who operate meta-mental (and more complex) models, and perhaps also people who are not used to employing different mental models to make sense of ongoing processes.

What value you might extract

Perhaps you’ll learn another way to think about reality. Accordingly, you may see other causal links.

About the author

I’ll share a few facts that shaped my mental models.

Basis: roughly speaking, every 3 to 6 months I moved and adapted to another society/country (first unconsciously, then consciously). For me, none of these is a given: health, clean air, water, food, electricity, a place to sleep (sleeping in silence is a bonus), a quiet place to be alone, peace, loved ones; clothing and the like are already extras. I’ve worn many social roles. I’ve practiced various spiritual practices. I’ve interacted with people from different social strata. I’m also a mathematician and have loved numbers since childhood.

Places lived (more than 2 months): Ukrainian SSR: Mykolaiv, Komsomolsk-on-Dnieper, Beryslav; Ukraine: Mykolaiv, Kramatorsk, Komsomolsk-on-Dnieper, Beryslav; Israel: Kibbutz Yagur, Nesher, Haifa; Switzerland: Lugano; France: Rennes; Greece: Athens; Germany: Berlin. I’ve been to many places.

PhD, topic: Spectral Multi-modal Methods for Data Analysis; advisor: Prof. Michael M. Bronstein.

Bon voyage, Captain!

The ship-control metaphor is close to me because I have a little experience piloting a fishing vessel in the Mediterranean. I’ll extend that metaphor to governing and modeling society and socio-economic systems. Imagine all humanity sailing on a ship. There are two helmsmen: the Collective Conscious and the Collective Unconscious. But that’s less interesting. Instead, imagine that you, my reader, are also a captain, of your own vessel (Fig. 2), which in some way affects the voyage of the large ship in Fig. 1.

Let’s first outline the laws and rules of motion. For easier memorization the laws are phrased simply. I formulated them for myself over a lifetime, and they reflect my personal experience, knowledge, and observations. Please use anything I “teach” reasonably and for good.

I hope it was interesting, reader, to spend a bit of your precious life with “me” reading this piece.

0. The “Divine Mystery” Law

There are events and concepts that fit no law… except the Divine Mystery.

Occam’s Razor

Don’t give a complex explanation if a simple one suffices. If you can explain something without resorting to “conspiracy theories,” use that explanation. This does not, however, mean a “conspiracy theory” can’t be true.

1. The Law of “Simplification” or “Parameterization”

Any phenomenon can be simplified into its components and then “worked with.” In mathematical terms, it can be parameterized by finding a “projection” onto a coordinate system suited to the task. After that, we work with this limited number of parameters.

- Animate: any person is a “cosmos” and very complex; it’s easier to group people into “classes” using task-relevant features (5PFQ, XYZα, Archetypes, Temperament, Zodiac signs, Socionics, Human Design, IQ, 9 Enneagram types, Marketing personas, Castes 1, Castes 2, Metaphysical Classes etc.).

- Inanimate: the phenomenon can be modeled as a Riemannian manifold, an object with a “tangent plane with coordinates” (a tangent space equipped with a metric) at each point; we then “project” everything onto that “plane” and apply standard mathematical tools for analysis and manipulation there (the “surface” of one image, the space of all images, a 3D object, the space of 3D objects, a graph, the space of all comments, all texts, all “artist styles,” all “music styles,” all songs, all audio signals produced by an artist/instrument, the space of all smooth functions, the space of all operators, etc.).

Rationale: Riemannian geometry and differential geometry, orthogonal functions: Fourier series, spectral decomposition, etc.; for example, the existence of psychology, which can find patterns and “catalog” personalities; the existence and “successful” use of “scripts” by scammers; the existence and use of pickup techniques for seduction, etc.
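To make Law 1 concrete, here is a minimal Python sketch of one standard way to find such a “projection”: principal component analysis (PCA) in two dimensions. It is my illustration, not the author’s method, and the data points are hypothetical. The code finds the direction along which the points vary most and then describes each point by a single number: its coordinate along that direction.

```python
import math


def principal_axis(points):
    """Return the centroid and the unit direction of largest variance of 2D points."""
    n = len(points)
    mx = sum(x for x, _ in points) / n
    my = sum(y for _, y in points) / n
    # Entries of the 2x2 covariance matrix [[sxx, sxy], [sxy, syy]]
    sxx = sum((x - mx) ** 2 for x, _ in points) / n
    syy = sum((y - my) ** 2 for _, y in points) / n
    sxy = sum((x - mx) * (y - my) for x, y in points) / n
    # Closed-form angle of the leading eigenvector of a symmetric 2x2 matrix
    theta = 0.5 * math.atan2(2 * sxy, sxx - syy)
    return (mx, my), (math.cos(theta), math.sin(theta))


def parameterize(points):
    """Replace each 2D point by one coordinate: its projection onto the principal axis."""
    (mx, my), (ux, uy) = principal_axis(points)
    return [(x - mx) * ux + (y - my) * uy for x, y in points]


# Hypothetical data: points lying close to the line y = 2x
data = [(0.0, 0.1), (1.0, 1.9), (2.0, 4.2), (3.0, 5.9)]
coords = parameterize(data)  # four points, now described by one number each
```

For points that lie exactly on a line, this single coordinate loses nothing, because distances between points are preserved. For noisy points, it keeps the dominant structure and discards the rest. Every “parameterization” makes that trade-off.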

We can “simplify”/“parameterize”: but why does that allow us to infer anything about people? Are humans predictable?

2. The “Matrix” Law, or “Oblomov-lite”

We often think, and therefore act, “automatically,” “by template,” executing certain “scripts.” Like a car, we can automatically switch among various, possibly very complex “scripts”/“templates” to solve familiar tasks without realizing it.

Any action or thought beyond existing experience typically triggers anxiety and doubt and takes more effort than the familiar. This requires discipline and a certain habit not everyone has developed.

Mindfulness is a tool for overcoming “automation” and “programming” (a bit about mindfulness here, and free training here).

- Promoted by: any monotonous activity, “routine,” certain jobs: cashier, assembly-line worker, etc.

- Not promoted by: activities requiring critical thinking, influence over people (sales, politics, marketing, fraud), sabotage and espionage, creative pursuits, investing, etc.

Rationale: automatic thoughts, life scripts, a short article with links to studies on automaticity (e.g., this or this), “Thinking, Fast and Slow”, G. Gurdjieff etc.

From personal observation, I have my own estimate of what percentage of people or tasks are “templated” or “automated,” and I use it for myself. I don’t know of studies on this, so I won’t cite numbers; I’ll just say I use a Pareto-like rule.

“Thinking is hard; that’s why most people judge.” (C. G. Jung)

So people are in some measure “predictable,” “automated,” and being mindful breaks this Matrix Law. Mindfulness is “trending”: many meditate, do yoga, various trainings, books, go to psychologists (countless), etc. So this law isn’t a law and hardly works?

3. The “Echo in the Mountains” Law

It’s a mistake to think of mindfulness as a “point” action (“being in the moment”), because mindfulness has a “shape,” a “radius.” The “radius of mindfulness” can be “defined” as the “distance over which we hear the echoes of our actions, of ‘ourselves’… and, in a more advanced sense, of our thoughts.” It is like hearing your voice echo in the mountains: some are deaf, some hear a couple of echoes, and some perhaps hear very long “reflections” from the cliffs.

Alternatively, the “radius of mindfulness” is how deeply, in each moment and in different contexts, we grasp causes and effects, ourselves, and our influence in this space.

So it turns out that what many people “train,” and where they actually “go” in practice, is often misguided and lets them do “with a clear conscience” what they would have done anyway. But what about practices: aren’t they supposed to help people understand this and more?

4. The “Controlled Opposition/Right-Wing” Law

Any practice (e.g., vipassana, Wim Hof breathing, silence retreats, satori, reality testing, psychoanalysis of various schools, working with koans, pills/mushrooms/herbs, kinds of yoga, therapies, etc.) is a tool. Sometimes a very powerful one.

Every tool is created to solve certain tasks. Many practices/tools imply preserving the “status quo” while practicing them; that is, they’re designed specifically so certain things don’t change — first and foremost in the practitioner’s thinking.

People from “structures,” sabotage units, political technologists, etc., may find this easier to grasp. There will always be people who disagree with any system. Law 15, “The Stick That Shoots Once a Year,” confirms that they exist, and Law 16, “The Bell Curve,” estimates how many there are. To control them, it’s more profitable to “lead” their movements: control the leadership, finance them, and watch and steer all their actions (protests, Telegram channels, etc.).

So one way to preserve the “status quo,” especially in thinking, is to promote and encourage “convenient practices, meditations, notions of mindfulness, yoga…”? What other techniques are used?

5. The “Girl in the Red Dress” Law

Those with children know you can redirect their attention so they “switch” from the “undesirable.” It’s similar with us: an “agenda” is created so we don’t pay attention to what we “shouldn’t”; as a result, the “wrong” questions and actions won’t come to mind.

6. The “Driven Horse” Law

Our socio-economic system is built, as a rule, so that we have as little time as possible to “look around,” so that “unneeded thoughts and questions” don’t arise.

7. The “Attention” Law

Where your attention is, there are you.

We mistakenly think money, time, etc., are the main resource. The most important human resource is likely attention (here I should pivot to the “soul,” the basis of everything, but I’ll stay with secular rhetoric). Next comes energy. That’s why “influencers” and the like make money: where people’s attention clusters, their energy follows, and in certain contexts that energy converts into money, for example.

This is probably the second most important law.

Why is attention control important?

8. The “Pink Elephant” Law

A person’s “world” is limited by their experience in the broad sense. What has never “entered” a person’s attention doesn’t exist for them in consciousness.

If someone has never heard or seen “pink elephants,” they can’t request a “pink elephant” for their “army.”

Any change begins with a thought about an idea… and giving it attention.

9. Einstein’s Law of Problem-Solving and Systems Analysis

“We cannot solve our problems with the same level of thinking that created them. We must rise to the next level.” (Albert Einstein)

The same holds for systems analysis: you can’t analyze a system you’re part of; first you must cease being a participant. That’s why, to resolve relationship problems and conflicts, we invite outsiders, such as a personal therapist, who are not participants in this “dynamic system” but “outside observers.”

10. “It Takes One to Know One”

Projection in psychology. “Good people” usually can’t imagine the full sophistication of “bad people’s” thoughts and intentions; they judge by themselves, based on their own experience.

11. The “Dao” Law

When “soft” appears, “hard” appears,

when “light” appears, “heavy” appears…

One cannot exist without the other.

12. The “Titanic” Law

Even if you “see an iceberg ahead,” there is “inertia” that prevents an instant “stop.” Inertia exists both in a single person (e.g., a longtime heavy smoker usually can’t quit instantly… or instantly adopt a new habit — “change their ship’s course”) and in a large socio-economic system.

13. The “Prophet” Law or “Disruptive Innovation”

If you do something that changes the system (primarily people’s thinking or you shift attention to what “shouldn’t be”), you start having “problems.” The “problems” grow with the “threat level to the system” (e.g., cancellation, discrediting, intimidation of you and/or your loved ones, depriving you of your livelihood, “strange coincidences,” death, and anything else “opponents/beneficiaries of the system” can imagine).

Conversely, if you’re “awarded” for your activity, then as a rule you are, at minimum, not hindering “the system.” So so-called “disruptive innovation” is largely a cultivated “illusion,” something that makes the system more resilient and gives it “antifragility” (I’m not vouching for “antifragility” here; it needs deeper thought).

14. The “People Don’t Change” Law

People are people. They love money — always have… Humanity loves money, no matter what it’s made of: leather, paper, bronze, or gold. They’re frivolous… and mercy sometimes knocks on their hearts… ordinary people… on the whole, like before… only “the housing question” has corrupted them… Quote by Woland from

People do change, but the “closer to the core,” the harder and slower the change.

15. The “Stick That Shoots Once a Year” Law

Any event, even extremely unlikely (e.g., winning the lottery), will “almost surely” occur with enough “tries.”

The number of events is usually described by Law 16, the “Bell Curve” (though there are special methods to compute the expectation of “rare quantities”).

Rationale: law of large numbers and expected value of the binomial distribution (for sufficiently large n), Infinite Monkey Theorem.
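Law 15 rests on simple arithmetic. If each independent try succeeds with probability p, the chance of at least one success in n tries is 1 − (1 − p)^n. The expected number of successes is n·p, the mean of the binomial distribution. The sketch below assumes independent tries with a constant p, which real events rarely guarantee. The one-in-a-million figure is hypothetical.

```python
import math


def prob_at_least_once(p, n):
    """P(event happens at least once in n independent tries), each with probability p."""
    # 1 - (1 - p)**n, computed with log1p/expm1 to stay accurate for tiny p
    return -math.expm1(n * math.log1p(-p))


def tries_needed(p, target):
    """Smallest n for which prob_at_least_once(p, n) reaches `target`."""
    n = math.ceil(math.log1p(-target) / math.log1p(-p))
    # Guard against floating-point rounding at the boundary
    while prob_at_least_once(p, n) < target:
        n += 1
    while n > 1 and prob_at_least_once(p, n - 1) >= target:
        n -= 1
    return n


def expected_count(p, n):
    """Expected number of occurrences in n tries: the mean of Binomial(n, p)."""
    return n * p


p = 1e-6  # hypothetical: a one-in-a-million event per try
```

With p = 10⁻⁶, about 693,000 tries (roughly ln 2 / p) give even odds. Ten times as many make the event all but certain.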

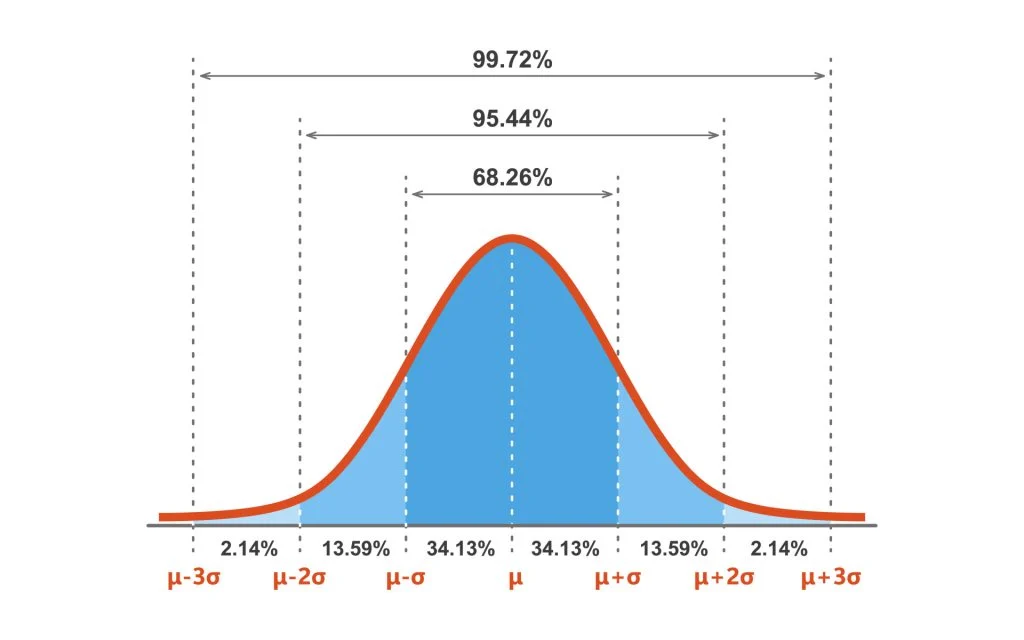

16. The “Bell Curve” Law

If we look at the distribution of (most) things or events, it looks like a “bell,” with its own mean and variance (e.g., human height distribution, inter-eye distance, number of civilian casualties in an assault, number of KIA/WIA given artillery quantity, IQ distribution, horse weight, etc.).

Rationale: of course I mean the normal distribution/Student’s t-distribution and central limit theorems.
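The central limit theorem behind Law 16 is easy to see numerically. The sketch below uses parameters of my own choosing. It adds up 12 independent Uniform(0, 1) draws and subtracts 6, so the mean is 0 and the standard deviation is exactly 1. It then checks that about 68% of the sums fall within one standard deviation of the mean, as they would for a normal distribution.

```python
import random
import statistics


def sum_of_uniforms(k, rng):
    """Sum of k independent Uniform(0, 1) draws, shifted so its mean is 0."""
    return sum(rng.random() for _ in range(k)) - k / 2


def bell_curve_demo(samples=20_000, k=12, seed=0):
    """Return the sample mean, standard deviation and share within one SD."""
    rng = random.Random(seed)
    xs = [sum_of_uniforms(k, rng) for _ in range(samples)]
    mean = statistics.fmean(xs)
    sd = statistics.pstdev(xs)  # theoretical value: sqrt(k / 12) = 1 for k = 12
    within_one_sd = sum(abs(x - mean) <= sd for x in xs) / samples
    return mean, sd, within_one_sd


mean, sd, share = bell_curve_demo()
```

None of the individual draws is bell-shaped; the “bell” appears only in their sum. That is why so many aggregate quantities look normal.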

17. The “80/20” Law

“20% of efforts yield 80% of results, and the remaining 80% of efforts yield only 20% of results.”

What isn’t covered by the normal distribution is often covered by the Pareto distribution (e.g., wealth distribution, word frequencies, etc.).

Rationale: Pareto distribution, Pareto principle, Pareto curve
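For a Pareto distribution with shape parameter α > 1, the top fraction q of the population holds a share q^(1 − 1/α) of the total. Choosing α = log 5 / log 4 ≈ 1.16 reproduces the 80/20 split exactly. This textbook property of the Pareto distribution is used here only as an illustration; real wealth or word-frequency data fit it only approximately.

```python
import math


def top_share(alpha, top_fraction):
    """Share of the total held by the top `top_fraction` of a Pareto(alpha) population."""
    if alpha <= 1:
        raise ValueError("the mean (and thus the total) is infinite for alpha <= 1")
    return top_fraction ** (1 - 1 / alpha)


# Shape parameter that makes the top 20% hold exactly 80%
ALPHA_80_20 = math.log(5) / math.log(4)
```

Because the rule is self-similar, it also applies within the top group. The top 20% of the top 20% (4% of the population) hold 80% of 80%, i.e., 64% of the total.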

18. The “Large Volume” Law

Even a tiny relative change in a large-volume system leads to significant absolute changes.

Suppose a pipeline has a small hole. If it pumps 10 liters/day, the losses are small; if it pumps millions of liters, they are substantial.

Another example: suppose a Starbucks in California learns via an A/B test that swapping the positions of cappuccino and Americano on the menu increases revenue by 0.03%. If global revenue is $32B, rolling this change out everywhere could add $9.6M.
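Law 18 is plain multiplication: absolute effect = volume × relative change. The sketch below reproduces the Starbucks figure from the text. The 0.1% leak rate and the 5 million liters/day volume in the pipeline example are hypothetical numbers of my own, chosen only to show the contrast.

```python
def absolute_effect(base_volume, relative_change):
    """Absolute change produced by a relative change applied to a base volume."""
    return base_volume * relative_change


# Starbucks example from the text: 0.03% of $32B
menu_gain_usd = absolute_effect(32e9, 0.03 / 100)

# Pipeline example; the 0.1% leak rate is hypothetical, not from the text
LEAK_RATE = 0.1 / 100
small_pipe_loss = absolute_effect(10, LEAK_RATE)         # liters/day
large_pipe_loss = absolute_effect(5_000_000, LEAK_RATE)  # liters/day
```

The same 0.1% leak costs a hundredth of a liter per day in the small pipe and 5,000 liters per day in the large one.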

19. The “Terminator” Law

In any mechanical/“templated” activity, machines will beat living beings (e.g., humans).

20. The “Boiled Frog” Law (special case: the “Overton Window”)

Gradual changes are accepted more easily than abrupt ones; the sign of change (“+” or “−”) doesn’t matter. Often changes may go unnoticed.

21. The “Weak Link” or “Bottleneck” Law

“Where it’s thin, it tears.”

Any system’s functioning can be seen as a flow and transformation of resources. In any closed system (manufacturing, sales, any “funnels,” a car, an army, logistics, etc.), there’s a bottleneck (BN) that limits this flow. Finding and removing the BN is the most effective way to improve the system.

A system is as strong as its weakest link.

Note: the theory of constraints/bottlenecks only partially applies to a person or, more generally, to Socio-Economic Systems (SES). SES dynamics have “funnels”/“magnets”; often there are “spirals” and “fractals of spirals and funnels.”

Rationale: Theory of Constraints
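For a simple serial process, the core idea of the Theory of Constraints fits in a few lines. The throughput of the whole line equals the capacity of its slowest stage, so improving any other stage changes nothing. The stage names and capacities below are hypothetical. The model also ignores buffers, variability, and the “funnels” and “spirals” mentioned above.

```python
def throughput(capacities):
    """Units per hour a serial line can sustain: the capacity of its slowest stage."""
    return min(capacities.values())


def bottleneck(capacities):
    """Name of the stage that limits the whole line."""
    return min(capacities, key=capacities.get)


# Hypothetical serial line: stage name -> capacity in units/hour
line = {"cutting": 120, "assembly": 45, "painting": 80, "packing": 150}
```

Doubling the packing capacity leaves throughput at 45 units/hour. Raising assembly to 100 lifts it to 80, and painting becomes the new bottleneck.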

22. The “Whirlpool” Law

In a person’s field of action there can be “whirlpools.” Imagine your ship traveling where the “current(s)” are strong due to “whirlpools.” This metaphor explains why it’s so hard — sometimes almost impossible — to exit the context in some cases (e.g., when people inhabit a social role, “get pulled into an ideology/sect/pogroms,” etc.).

I’ll write more about this in a piece on complex mental models; there it will become clear why BN theory doesn’t apply.

23. The “Flock Magnet” Law

It’s hard to go against everyone or most, to resist the consensus.

24. The “Stairs in the (Chinese) Cinema” Law

Suppose we’re sitting in a cinema. If the people in the row in front bring cushions, we’ll also need to sit on something to make up the height if we want to see. And so on: the higher those in front sit, the higher we must sit.

Many processes mirror this: using AI/digitalization/ads in business; drones/satellites/EW/… in war, etc. If you don’t use the “stair,” you won’t “see” (you’ll be disadvantaged).

I first heard this from a Chinese woman sharing a parable.

25. The “Wait, You Can Do That?!” Law

A new, better way to achieve prior (or better) results is quickly adopted by other “players.” This is closely tied to Law 24: otherwise you’ll lose by doing things “the old way.”

Examples: first adoption of the subscription business model (now standard); drones in war, etc.

26…41. Law: …

These laws are not covered here. You can suggest the missing ones through any convenient feedback channel.

42. The ॐ Law

This is the most complex law; it was told to me. I didn’t understand it until I did an extreme spiritual practice.

I doubt most will get it, so I’ll limit it to:

- the living is a source of “waves”: our brain usually finds plausible explanations for what has already been “decided” at the “wave” level;

- a person is a “membrane”: receives and transmits electromagnetic waves; all people (the living) are connected and influence each other; through certain wave signals you can influence, “synchronize,” and “conduct” people.

Rationale: the closest (applied) thing I found is in this video

The “Socrates” Paradox

“I know that I know nothing.” (attributed to Socrates)

“The best knowledge is not knowing that you know anything.” (Laozi)

“99.99%” of people think they “know” more than “Socrates,” paradoxically including me.

An example of “incomplete” thinking:

- it’s obvious what a man/woman is…

- ads/movies/cartoons/propaganda don’t influence me

- scammers won’t fool me — that won’t happen to me

- it’s clear what money is — look it up

- …

Laws can and should be combined.

The journey begins

Let’s inspect our ship in Fig. 1.

We have a very large multi-deck ship. Some work in the “engine room” or kitchen, others ensure electronics run smoothly, someone parties carefree on the deck, etc.; there are many rooms.

We know some of the ship’s previous routes (note that this information is incomplete, and you should correct the “course,” i.e., the model, as new inputs arrive). Examples of “routes”: stories of different situations/civilizations, wars, discoveries, relationships, statistics on different strata in different contexts (e.g., the expected number of breakdowns among assault/defense troops in a given situation with given ammunition and inputs), etc.

Based on 1. The Law of “Simplification” and 17. The “80/20” Law, there’s a limited number of “parameters” explaining “our ship’s route.” Additionally, 14. The “People Don’t Change” Law implies that the closer these “parameters” are to the “center,” the more slowly they change compared with the “external” ones. Finally, 12. The “Titanic” Law implies that “movement along the route” is highly inertial. Which “center-close parameters” largely determine our “collective journey”?

It seems to me they are our values and meanings. That is, values and meanings, both declared and real, are the indicators and beacons of our “movement toward various islands.” By “islands” I mean where we “end up” as a result of “our sailing.” We may linger on some islands. We may avoid others if, “coming closer,” we see an “unpleasant island” and manage to “swerve” in time, per 12. The “Titanic” Law.

Examples of values or meanings (often the division is fuzzy):

- cost optimization, profit, ROI…

- money: dollars, euros, rubles; crypto…

- luxury: yachts, watches, brands, gold, diamonds…

- success:

- social reward: titles, certificates, followers, authorship of books/articles, co-authorship with famous people… tangible signs: car brand, address, a beautiful woman, a mistress…

- health

- avoidance: pain, shame, fears, humiliation…

- striving: to enjoy…

- resources: electricity, gas, oil…; food, water…

- being on trend, first

- being popular

- feeling part of something bigger

- owning “exclusive”: intellect, talent, clothing style, looks, diamonds, art…

- …

In today’s ocean journey we’ll meet only 2 types of islands: islands of “1+1=2” and islands of “1+1>2.”

V&M motion: Value–Meaning motion

Definition 1. V&M motion: the development dynamics of society (“the movement of our ship in Fig. 1”) as determined by the parameters considered above.

“1+1=2” islands: events/things along our V&M course that are already marked on the “charts” and that we are highly likely to encounter on the island.

A few examples I’ve found on “1+1=2” islands: drones, Tesla, Starlink, deep learning, geometric deep learning, Transformers, Large Language Models, GPT-1 to GPT-4 and ChatGPT, Google, etc.

“1+1>2” islands: unexpectedly encountered — non-obvious events/processes that radically change life aboard.

On “1+1>2” islands I’ve seen electricity, the steam engine, nuclear energy, the internet, blockchain, possibly the smartphone, etc.

A mini V&M cruise toward the “Power and Influence” islands

The value of power and influence is shared by many people on different continents. Often in practice, though not in words, these values outweigh the value of other human lives, etc. As long as this holds, there will be wars and violence and seizures of resources and values. On top of that comes the psychological satisfaction from the process and the result (read: I/we are cooler, stronger, smarter, richer, more democratic than everyone else, etc.). The value of achieving goals with minimal resources is also widespread, which means people will keep getting better at it.

The above has held for a long time, so it’s logical to assume that along our V&M movement we’ll keep encountering “1+1=2” islands where these attributes manifest. Among the “1+1=2” islands on this V&M path, we’ll find things/tools for rapidly neutralizing people/obstacles with minimal resources. We’ll also find new flying/floating/jumping/loitering/underwater, etc., devices for the same ends, operating in new environments (space, Mars, under water, consciousness, soul, etc.). On these islands we’ll encounter tools to influence consciousness (it’s easier to neutralize a person by influencing their consciousness early so they’re controllable/friendly), biology/DNA, possibly wave/laser/bacterial/DNA-ethno modifiers, etc. We don’t yet have names for everything. Some we do: combat drones, nuclear weapons, EW, Starlink, etc. But we know the probability of finding such tools throughout this V&M journey is very high.

Similarly we can undertake V&M journeys in other directions: AI, healthcare, finance, education, etc.

Example: a journey to “1+1=2” islands named Kandinsky 7.0 and ChatGPT 42.0

As in any voyage, we should prepare: study prior routes, ready our tools and provisions.

How new ML models work and what their boundary is — in simple terms

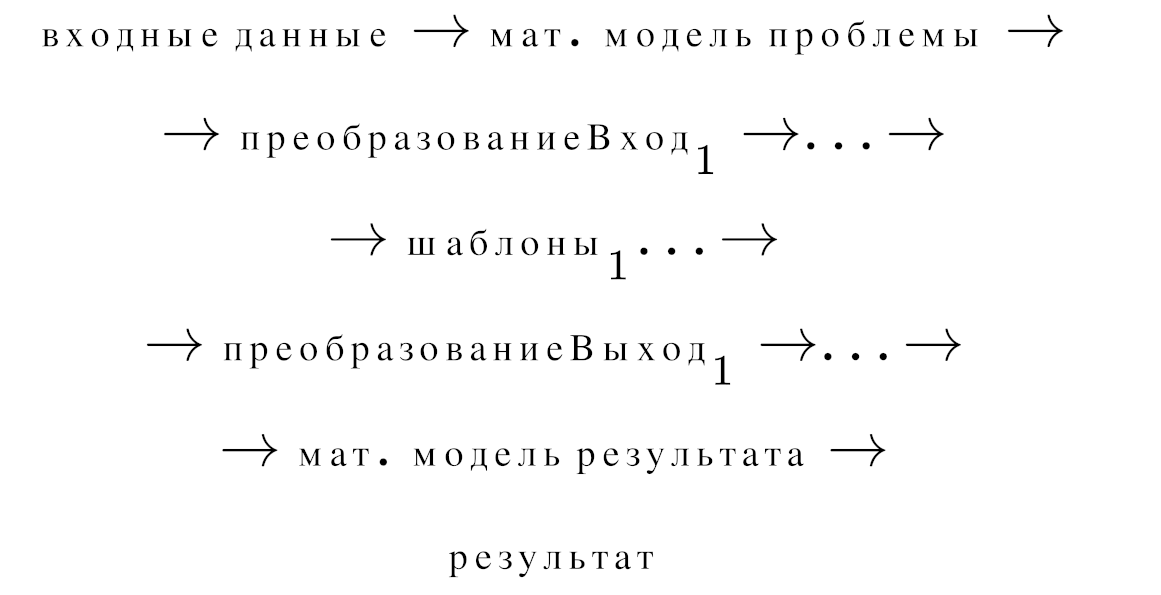

Almost any task (translation, face recognition, text generation, etc.) can be cast as a function or functional (a functional takes a function as input and outputs a number), with the data coded in numbers; that is, the task is modeled mathematically.

The elementary functions at the base of deep learning models, and the way they’re connected into a single structure, let us encode the template structures of a task (this is especially clear in computer vision tasks).

Approximation theorems (e.g., the universal approximation theorem) provide the theoretical backing for using deep learning models for almost any task. Patterns and transform functions are expressed via the model’s parameters.

Training modern models, in general, means finding patterns and mapping functions (transformations of patterns/intermediate data) that minimize training error within the chosen mathematical model. For sequential data (text, time series, etc.), we can add “contextual” information. There’s a dictionary, and for each point we compute weights over the relevant dictionary/pattern items: a large weight near a word means that item is more relevant for that point/pattern. This is commonly called transformers/attention, though I prefer “contextual information.” It’s what our brain does: it looks at the “context” beyond the direct meaning(s). Then we learn the mapping from patterns + context to/from the original data. Some mapping functions can be omitted; in this construction they’re constants. In short, that’s what we do now.
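The “contextual information” step described above is usually implemented as scaled dot-product attention. Each query is compared with every key, the scores are turned into weights with a softmax, and the output is the weighted mix of the values. The pure-Python sketch below follows that standard formula, softmax(q·kᵀ/√d)·V. It is a toy illustration, not the code of any particular model, and the vectors are hypothetical.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention: each output is a weighted mix of `values`,
    weighted by how well its query matches each key (the "context")."""
    d = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        w = softmax(scores)
        out = [sum(wj * v[i] for wj, v in zip(w, values)) for i in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(w)
    return outputs, all_weights


# Hypothetical toy "dictionary": two keys with associated values
keys = [[10.0, 0.0], [0.0, 10.0]]
values = [[1.0, 0.0], [0.0, 1.0]]
```

A query that matches the first key almost exclusively retrieves the first value. A query that matches neither key spreads its weight evenly.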

Note that because Values and Meanings are far fewer than their manifestations, and relatively static (they generally don’t change fast), our mental model of the world becomes simpler. There are fewer parameters to track, and processes separate into more and less important ones. They also “slow down” in proportion to the rate of change of Values/Meanings.

Model boundary

We’re limited by the patterns the model has learned plus its transform functions. If a phenomenon is generated by a different “pattern,” we get its projection onto the manifold of variants the model can express. If our mapping function from “patterns” to the manifold of the modeled phenomenon is “poorly learned,” we get a “hallucination”: an output that doesn’t belong to the phenomenon manifold we’re modeling/describing. The couplings of context and meaning (and hence the variations of patterns) are infinite, so this modeling route will never create artificial intelligence; there’ll always be an “uncovered 1%.” It’s akin to representing a signal via a “decaying” basis like Fourier: how many basis elements are needed to represent any function? All of them!
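The Fourier analogy can be made quantitative. For a square wave, Parseval’s identity says the mean-squared error of a partial Fourier sum equals the energy in the omitted harmonics. The sketch below uses a square wave as my own choice of example. It shows that this error keeps shrinking as harmonics are added but stays above zero for any finite number of them.

```python
import math


def square_wave_coeff(k):
    """Fourier sine coefficient b_k of the square wave sign(sin x) over one period."""
    return 4 / (math.pi * k) if k % 2 == 1 else 0.0


def partial_sum(x, n_terms):
    """Value at x of the Fourier partial sum using harmonics 1..n_terms."""
    return sum(square_wave_coeff(k) * math.sin(k * x) for k in range(1, n_terms + 1))


def mse_after_terms(n_terms):
    """Mean-squared error of the partial sum over one period.
    By Parseval, mean(f^2) = 1 = (1/2) * sum(b_k^2), so the error is exactly
    the share carried by the harmonics we left out."""
    captured = 0.5 * sum(square_wave_coeff(k) ** 2 for k in range(1, n_terms + 1))
    return 1.0 - captured


errors = [mse_after_terms(n) for n in (1, 3, 9, 99, 999)]
```

Each added harmonic covers part of the remaining gap, but no finite number of them closes it. This is the “uncovered” remainder in mathematical form.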

Developing artificial intelligence requires a fundamentally different modeling approach… ultimately of our brain. And do we need artificial intelligence, by the way? For me the answer isn’t obvious.

If you’re interested, for example Alexey Redozubov (@AlexeyR @habr) has explored alternative paths to AI.

Collective consciousness

Reality is “broader” than any language; moreover, language largely conditions our reality. Collective consciousness is the upper limit of models based on our brain. Strong AI with greater compute may give us a new experience of living reality.

What V&M motion will the ship take: how will visiting “1+1=2” islands named Kandinsky 7.0 and ChatGPT 42.0 affect society?

Sailing this course will “bite off” ever larger parts of the templated nature of our collective consciousness. This will materialize gradually, following the principle of least resistance (mental work is easier to replace than physical work). Models will capture more patterns, more strongly. In line with 2. The “Matrix/Oblomov-lite” Law, people usually think in fairly templated ways. So 17. The “80/20” Law will most likely hold, applied again and again to the remaining “areas,” down to the “uncovered 1%” mentioned earlier.
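One way to read “80/20 applied again and again” is the following hypothetical model, not the author’s calculation. If each round of automation covers 80% of whatever is still templated, the uncovered share after k rounds is 0.2^k. It drops below 1% after three rounds but never reaches zero.

```python
def uncovered_share(rounds, covered_per_round=0.8):
    """Fraction of tasks still not automated if each round covers
    `covered_per_round` of whatever remained (a hypothetical model)."""
    return (1 - covered_per_round) ** rounds


shares = [uncovered_share(k) for k in range(1, 5)]  # roughly 20%, 4%, 0.8%, 0.16%
```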

As our ship sails on AI winds, we’ll accelerate: templated tasks will be done faster, and new patterns will be discovered. Often the brake won’t be technology but not knowing what to do with the “freed” people, at least until someone figures that out.

This revolution differs radically from prior tech revolutions. We’re replacing our collective consciousness itself with models (and then, following V&M logic, with robots), and only afterward, as a consequence, other social phenomena (workplace interactions, text writing, etc.). That is, we automate the bedrock. Accordingly, any activity that can be templated/algorithmized will be automated. It follows that we won’t be able to invent jobs for people unless those jobs are creative (non-templated) or “roughly” physical (until we learn to make bio-robots/robots).

Whether the discovered phenomena on the islands are named ChatGPT 42.0 or Kandinsky 7.0 isn’t crucial. What’s clear is they’re “1+1=2” islands and that such “ordinal versions” will have the properties above.

A notable case is a business consultant who coded business processes and decision-making in a large company, and it turned out 90% of top management wasn’t needed and was fired (90% of situations are standard and can be solved without the top).

Along the journey we’ll very likely visit a “1+1>2” island — a fundamentally different way to model…the brain.

Everyday examples of patterns becoming clearer and speeding us up: templating the work of Data Scientists/Engineers; templates in Miro, Notion, Evernote; presentations; business: 55 business-model patterns, etc. By and large, all books on being successful, making friends, presenting, etc., are about learning patterns in different spheres (mostly they reduce to the same inner patterns and experiences — a separate topic).

We usually identify with, i.e., “stick” to, our mental model; this is why advertising, propaganda, TV, sabotage operations, etc., are so effective: they rely mainly on meta-mental models. Pushing a person out of calm into emotion (e.g., by triggering fear, trauma, complexes, humiliation, aversion, pride) lowers awareness and so activates their mental model, limiting critical thinking.

Critique and boundaries of this mental model

- it’s a very simple model that ignores many aspects: consciousness, the collective conscious and unconscious, the spiritual, etc.

- the model relies entirely on a “positivist scientific view”: the more we know, the more accurate it is. Ideally we would also know classified (“closed”) information; then many crises and wars would fall under “1+1=2” rather than “1+1>2,” and we could foresee the islands well in advance.

- it is advisable to use different mental models depending on the task and context. Professions that foster complex models include psychologists/psychiatrists, sociologists, intelligence and sabotage operatives, marketers/brand specialists, con artists/social engineers, philosophers/spiritual practitioners, investors (G. Soros, C. Munger, W. Buffett), political strategists (V. Surkov, A. Arestovich, Z. Brzezinski, etc.), and others (“Professor Moriarty”). In parentheses I listed people who, in my observation, probably use more advanced mental models; let’s call them meta-mental models. There are many meta-mental models (one example was presented by Felix Shmidel). Within such a model one also operates other mental models to represent reality. Ideally, if you can influence society’s “reality” through the collective consciousness, it is most advantageous to stay in the shadows; I think many people with incredibly complex meta-mental models are unknown except to a very narrow circle. Note that now, to these people’s complex models, closed information, and advanced modern knowledge of the brain and of ways to influence the collective consciousness, we are adding AI capabilities (Palantir & Co, etc.).

- note the hardest type: spiritual meta-mental models. Beyond them lies the spiritual itself. In my observation, such personalities include A. Arestovich, probably V. Surkov, V. Putin; and, historically, G. Rasputin, G. Gurdjieff, Osho, J. Stalin, Joan of Arc, etc. Their worldview is hard to grasp because their core meanings and values lie beyond the material and are usually unique. So while most people attach great importance to the houses, yachts, and money they own, for such people these things probably mean something very different: they invest them with other symbols. And we mostly judge others by ourselves, through our own worldview; hence the difficulty.

- non-determinism: any mental model is a probabilistic model (even if the probability is almost 100%).

Why it’s important now to rethink why and how we interact

The drive for high margins turns even a trip to a mistress into a military operation.

F. Shmidel

In the realm of “doing,” especially templated doing, machines will outperform us (see Law 19). Templates can be non-trivial and very complex, and sooner or later new models will learn them (many of these templates will surprise most people). Within our current mode of interaction we have reached the point where we can teach machines complex templates and mapping functions, together with contextual information.

We need to decide whether to continue our V&M journey under the same winds, or…?

In “others” who are weaker, less intelligent, or less far-sighted, who have not learned to operate meta-mental models or whose time for that has not yet come, will we see only a source of goods and a means to our goals, a “battery” of resources, a “bio-robot”? Or will we see the value of the other as a living being?

Simply because in this vast galaxy a miracle happened, and before us stands a living being who appeared here for some reason, just like you, dear reader!

Note that I have set aside (geo)political conflicts, assuming we can negotiate.

In short:

- our socio-economic system is unbalanced and inevitably leads to stratification (within countries and globally) and ultimately to segregation (likely classes: “elite,” “service” (physical, “spiritual,” intellectual), “security,” “bio-mass”);

- although the system is already cracking, and it is unclear what shape it will take in 2–10 years;

- AI and automation significantly accelerate the dynamics of wealth distribution;

- AI and automation are a revolution in the means of production, inevitably leading to social tension and conflict between classes;

- Elites are, in most cases, people like us first of all: we all worry about savings and living standards and our children’s future, and we act from our mental models and experience. In purely numerical terms, earning more than they already have makes no sense for E. Musk, B. Gates, W. Buffett, R. Abramovich, J. Bezos, etc. Whether W. Buffett’s fortune is $10B larger or smaller barely affects his actual standard of living, not to mention that you can’t take any of it with you. Earning beyond a reasonable measure resembles:

I fight… simply because I fight!

Porthos

To me, the solution is a movement toward a society where everyone can keep doing what they love (W. Buffett, for example, investing) and maintain a “normal” standard of living, while the means of production, as a result of economic activity, are gradually redistributed more evenly by mutual agreement. Distribution then improves step by step.

As for being able to agree:

We can’t agree on anything; any tool or resource becomes a weapon.

A. Kubyshkin

Any technology, philosophy, concept becomes a weapon for us: democracy, rumors, car/ship insurance, financial protocols, money, life resources, talents, the internet, history, good intentions — and in any medium: earth, air, water, under water, space, consciousness, soul…

What’s next

The main question: why and how do we interact with each other, in relationships, companies, cities, countries, on Earth? How else could we interact? The answer, I think, lies in deciding what is primary and what is secondary:

(a)

- Mutual aid (compassion and mercy)

- Competition

or

(b)

- Competition

- Mutual aid (compassion and mercy)

If (b), then follow nature and the animals. For example, when lions take over a pride’s territory after defeating the old leaders, they first kill the cubs, the offspring of the former leaders:

it’s easier to eliminate competitors while they’re weak, ideally in the embryo or before birth.

Accordingly, the most profitable way to compete is to remove competitors’ children: kill them, create unfavorable conditions for their development, feed them harmful products, etc. Rather than blocking the roads to Ukrainian products, better to neutralize all Ukrainian/African children. And treat other people’s children likewise: teach them skills that impede adaptation and development. Better still, develop DNA weapons that render women of a certain ethnicity or group infertile; then you won’t need to fight grown warriors. May benefit be with us, for isn’t that what matters most?

If (a), the direction is improving quality of life (in many dimensions) for all participants, i.e., increasing the good. The criterion of correctness: “it has not gotten worse than it was.”

And if I’m told to hold my tongue,

I’ll still keep saying what I know:

What lies ahead — God knows,

But what is mine is mine!

I. A. Krylov

It may sound less pretty or ambitious, but this formula also includes a comparison with what was: take the best of the past, learn from mistakes and experience, and look ahead cautiously, moving gradually.

Making things “fair”/“equal,” etc., is utopia.

It is equally utopian for someone to say “I know what’s best for everyone”: the ship’s control system has become too complex. We should hold to this direction, with continuous feedback from the passengers.

Note that with such movement, passengers are inevitably forced to turn inward to understand what they need and whether things have gotten worse, which in turn leads to rethinking consumer culture and other values. Along this direction we do not need strong AI, which some argue is required (e.g., for resource allocation), though there is no conceptual contradiction in using it either.

In future articles I’ll discuss our options and why thinking in terms of capitalism and/or socialism and/or … another …-ism is flawed at the root.

May benefit be with you, for isn’t that what matters most?

I’m afraid of becoming like adults who care about nothing but numbers...

The Little Prince, A. de Saint-Exupéry

Examples of how I process information: materials with key theses and my comments

1. Alexander Kubyshkin, “AI, the World Economy, What to Expect” (ENG), key theses:

- energy — the basis

- everything in this world is an economic (mental, spiritual, cultural) weapon; it is impossible to agree on anything // political weapon

- 6th tech wave

- 1–5 waves (each transition uses ~2× more energy)

- new technologies require a lot of energy

- new tech: closed energy production loop // untested

- doesn’t believe in the “green transition” (example: a giant space station with ideal batteries and conditions); little space + scarce battery resources → look at the bottleneck (“BN”): China

- crypto/blockchain + AI

- we won’t live in a globalized world!!!

- supply chains will localize

- AI will help with that; no global world

- technology moves faster than society adapts

- no incentives to take risks in management

- personal data via blockchain

- ban decentralization tech

- drift toward “China’s” model

- shift in (capitalist) paradigm

- growth impossible if resources are limited

- no expansion is possible (space: Musk is trying); after Chernobyl, nuclear technology was frozen for 25 years → conflicts will happen

- 40% of NASDAQ are “lalaland” companies — unclear what/how/whom they sell and whether they’ll deliver

- if you total all costs (environment, mining, etc.), the cleanest energy is nuclear

- rising risks, instability, need quick reaction + feedback

- the only loser — Europe:

- no energy

- governance is ineffective (politicians are clowns, incapable)

- transition to a new economy is impossible without war: question is how big?

- US/China — two models of organizing societies

- China’s model amid turbulence may be adopted by others

- Japan — prediction (demographics): robots will be “part” of society

- conflict in turbulence not necessarily between two states but many

- the Crimean War of the 1850s let Britain retain dominance for another 100 years

- Schwab’s idea of inclusive capitalism: elites are “good,” others are slaves

- book: Tom Burgis, “Kleptopia: How Dirty Money Is Conquering the World”

- top-level corruption: the money ends up in the West; through the banking system, the West controls the money and the elites, and thus states (e.g., Kazakhstan)

- the financial bubble (40 years) must burst, after which building can start

- money

- tax — a mechanism for the state to incentivize behavior

- money is created not by the state but by banks; less than 1% is “money,” 95% of “money” comes from the private banking sector (I think this is understated)

- no one can define what money is (joke: in the US they can’t define what a woman is)

- banks have ways of printing/creating money and thus of creating financial statements across the whole system

- central banks, banks, and non-bank financial institutions: 450–470T USD; global economy: 90T (financial assets ≈ 6× GDP)

- classic banks: 200T; shadow “banks” (hedge funds, insurers…): 250T; central banks: 40T

- we don’t know how the system works or what counts as money…

- 50% of all transfers are in dollars; 95% of derivatives are in dollars

- 2% — non-dollars

- only 50% of apartments in NY are occupied

YouTube channel Alexander Kubyshkin

2. Economist on AI & Society, key theses:

- skeptical — current tech isn’t “there” yet

- the risk isn’t human extinction, but bias with discriminatory social effects, privacy and IP, etc.

3. Sam Altman | Lex Fridman #367 (ENG), key theses:

- OpenAI — huge advances in AI

- but note AI risks (Lex) — we’re on the cusp of change (in our lifetime)

- inspiring: many known applications and many not yet known; it will help end poverty and bring us closer to the happiness we all seek !!! what is this based on? !!! danger: we may be destroyed, as in Orwell’s 1984 / Huxley’s Brave New World… stop thinking, etc.

- need a balance between AGI’s possibilities and danger

- 2015 → they declared the goal of working on strong AI and many in the field mocked them; Sam says they had poor branding

- Lex: DeepMind & OpenAI were small groups unafraid to declare the goal to create AGI

- Sam: now no one laughs (before they mocked/criticized the AGI goal)

- this is about power, the psychology of AI creators, etc.

- GPT-4 — why and what

- Sam: a system we’ll look back on as the first AI — slow, buggy — but it will be important, like computers

- Sam: progress is exponential; no “point” to mark

- Lex: too much! the problem is filtering data

- Sam: many pieces to assemble into a pipeline (new ideas or good implementation)

- Lex: quotes “…chatgpt learns something….”

- Sam: most important: “how useful is it for people?”

- Lex: not sure we’ll ever understand these models; they compress the internet/texts into parameters

- Sam: by some definitions of intelligence/reasoning, ChatGPT can do it to some degree, which is somehow incredible

- Lex: many will agree, especially after chatting with it

- Sam: agree re ideas

- Lex: Peterson asked if Jordan Peterson is a fascist:

- Sam: “what people ask says a lot about them.” Peterson asked about the token length of the answer and concluded that ChatGPT lies and knows it lies.. it can’t count length

- Sam: I hope these models bring nuance back !!! what’s that hope based on? !!!

- Sam: progress has exponential nature.. impossible to point to a “leap”

- Sam: we apply RLHF to the models; without it they are less useful

- Sam: some things that are trivial for us are not trivial for the model (counting characters, etc.)

- Sam: we release early because we believe that if THIS is going to shape the future, collective feedback will find the good and bad behaviors

- we could never find these things inside the company without collective intelligence and iterative improvement

- GPT-4 is much better and less biased than 3.5.. he wasn’t especially proud of 3.5’s biases !!! what role do Ego vs “making the world better” play? !!!

- Sam: no two people will ever agree on quality; over time we’ll give people personalized control

- Sam: I dreamed of building AI and didn’t think I’d get a chance

- I couldn’t imagine that after the first “primitive” iteration I’d have to spend time on nitpicks like “how many characters are in the answers about the two people”; “You give people AGI and THIS is what they do?!” !!! you make something powerful & accessible and don’t know what people will do with it.. maybe decide what/how first? !!! Yet he admits these nitpicks are important !!! contradiction? !!!

- Lex: how much do you spend on safety?

- Sam: we spend a lot on alignment (safety, bias, “all the good against all the bad”).. proud of GPT-4; lots of testing (!!! no numbers !!!)

- Lex: share alignment experience

- Sam: we haven’t found a way to align super-powerful systems

- people don’t grasp nuance; alignment work resembles all other work we do (DALL-E)

- Sam: we’ll need to agree on model boundaries (good/bad..)

- users get control via prompts (“answer like xxx..”): prompt engineering as UX

- Lex: since it’s trained on “our” data, we can learn about ourselves

- with experience the system responds smarter; feels like a person

- Lex: LLMs/ChatGPT will change the nature of programming

- Sam: already happening; the biggest short-term change (helps do work/creative better and better) !!! better or just faster? long-term creativity? skill atrophies? !!!

- Lex/Sam: ask for code, then iteratively improve via “dialogue”.. new debugging?

- Sam: a creative dialogue partner.. that’s huge! !!! why always faster/more efficient? what about our kids’ skills? !!!

- debate about what counts as “hate speech,” etc., and what models should do with it

- example of a Constitution as social contract.. democratically !!! is democracy the right tool here? !!!

- Lex: how bad is the base model — Sam dodged !!! openness includes unpleasant things !!!

- Sam: we’re open to criticism.. not sure we grasp how it affects us.. not sure

- Sam: I don’t like when a computer “teaches” me.. important I “control” the process and can “throw the computer out” !!! pressing the human control fear button? !!!

- I often say in the office: treat people as adults !!! what does “adult” mean? !!!

- Sam: the gap between 3.5 and 4 is large; we’re good at many small technical improvements that add up to 4

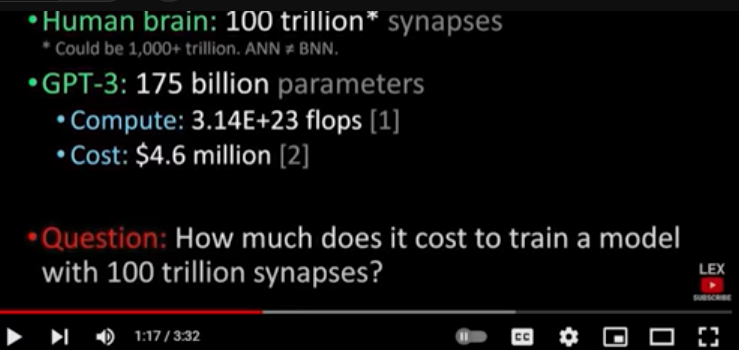

Training cost and number of parameters (brain connections)

- Lex/Sam: compare brain vs neural nets — impressive

- discourse gets ripped out of context

- Sam: the hardest thing humans have done; later it will be trivial and many will repeat it

- Lex: model trained on all human data; compression of all humanity

- Lex: how many parameters?

- Sam: people got caught up in a parameter-count race; at OpenAI we focus on results, not “beauty” !!! how many params? answer: we focus on models that work !!!

- Lex: Noam Chomsky is a major critic of the idea that LLMs can reach AGI

- do you think LLMs are the path to AGI (consciousness)? Sam: they are part of the “equation,” but other crucial things are needed; the ChatGPT paradigm must be extended with ideas we don’t yet know; if the “Oracle” told me ChatGPT-10 is AGI, I wouldn’t be shocked; maybe we never build AGI but make people “super great” !!! at what? !!! and that’s huge !!! maybe recall what it means to be Human first? !!!

- Sam: a system that can’t add radically new scientific knowledge isn’t “superintelligence” !!! I read: “I don’t know what strong intelligence is, but I want to build it and believe it makes life better… and I’m also building a bunker…” !!!

- Sam: I’m inspired by a world where ChatGPT extends a person’s “will” !!! then why a bunker? !!!

- Lex: I like programming with AI

- fear that AI will take programmers’ jobs; “if it takes yours, you weren’t a good programmer” is only partly true, since there is a creative part !!! logically off: if 80% of the job is standard, it’s cheaper to do with AI regardless of your level !!! Sam: many are inspired by 10× productivity; few turn it off !!! Law 24, “Cinema stairs”: eventually, if you don’t use it, you lose the “race”; hence the “choice” to turn it off is taken away !!!

- Sam: Kasparov said chess is dead after losing to a computer, yet chess has never been more popular;

- we don’t watch AIs play each other !!! are we enjoying it more? or did “surprise” give way to dry calculation? !!!

- Lex: AI will be better blah blah, but we’ll need emotions/imperfection…

- Sam: the uplift to living standards is incredible; we’ll do incredible things !!! based on what? are Japan/Korea/Switzerland/Scandinavia (higher suicide, aging) happier than Jamaica/Bali? !!! people like being useful, drama.. we’ll find a way to provide it !!! so AI makes them useless?! maybe let people decide… !!!

- Lex: Eliezer Yudkowsky warns that AGI may destroy humanity

- core: you can’t control a superintelligence. Sam: he may be right, and we must focus on the problem; approach: iterative improvement, early feedback, limiting one-shot situations. Eliezer explained why AI alignment is hard; Sam sees logical errors (unnamed), but overall thinks he thought it through

- Lex: will AGI appear fast or gradually? daily life change?

- Sam: (Lex agrees) better for the world if slow, gradual

- I fear rapid AGI development

- Sam: is GPT-4 AGI?

- Lex: like UFO videos, we’d know instantly. Some capabilities not accessible

- Sam: GPT-4 isn’t AGI !!! dodged on hidden capabilities !!!

- Lex: does GPT-4 have consciousness?

- Sam: I think not; do you?

- Lex: it can pretend

- Lex: I believe AI will have consciousness; how? suffering, memory, communication

- Sam: quoting Ilya Sutskever: if “consciousness” isn’t in training data but the model can answer that “it understands what consciousness feels like,” then… (it has consciousness)

- Lex: consciousness is experiencing life (Sam: not necessarily emotions); in the film, a robot smiles to itself (experience for experience’s sake) — a sign of consciousness.. claims emotions may play a leading role

- They can’t say anything definitive about consciousness

- Lex: reasons things might go wrong en route to AGI? you’ve said you’re inspired and a bit afraid..

- Sam: yes, it would be strange not to feel fear

- I sympathize with those who are very afraid !!! “we’re on the same side”: a communication technique? !!! didn’t answer !!!

- Lex: will you recognize the moment the system becomes super-smart?

- Sam: my current worries:

- lying/disinformation problems; economic shocks/crises; other things far beyond what we’re ready for. None of the above requires AGI; few are paying attention… even before AGI !!! he didn’t answer but said there are earlier problems !!!

- Lex: so even scaling current models may change geopolitics?

- Sam: how do we know LLMs aren’t steering flows/discourse on Twitter? etc.?

- we can’t know! and that’s dangerous… maybe regulation, stronger AI !!! we built a powerful disinformation generator and can’t control consequences — given the huge role of social networks — revolutions have been made through them; so much for “making the world better” !!!

- Lex: how do you handle market pressure (Google, etc.), open-source…? priorities?

- Sam: I stick to my belief and mission !!! source of mission? !!!

- there will be many strong AIs; we’ll offer one; diversity is good; at first people laughed, but we were brave enough to declare AGI… !!! ego vs helping others? !!!

- Sam: OpenAI has a complex structure… non-profit control, but pure non-profit didn’t work.. needed some “perks of capitalism”

- I think no one wants to destroy the world, so capitalism etc. → “better angels” and good companies win in the end !!! logical error/dangerous assumption !!! We collectively discuss and try to mitigate terrible risks

- Lex: no one wants to destroy the world !!! unfounded !!!

- Lex: two guys in a room may say: “Holy crap!!”

- Sam: that happens more often than you think

- Lex: this could make you the most powerful person; do you worry that power corrupts?

- Sam: of course! decisions on AI use should be more and more democratized, but we don’t yet know how. One reason: give time to adapt, reflect, adjust laws.

- Sam: very bad if one person has access; I do NOT want privileges on the board !!! what happened at your board? !!!

- Lex: creating AGI gives immense power

- Sam: (asks questions) do you think we’re doing well? what should we improve? In response to Lex’s remark on openness, he asked whether they should open-source GPT-4. Lex said no; he trusts many people at OpenAI.

- Sam: Google likely wouldn’t open an API; we did; we may not be as open as many want, but we do a lot.

- Lex agrees: their fear is more about the risks of access than about PR

- Sam: people here feel responsibility !!! to whom? !!! open to ideas; gets feedback from talks like this !!! are the most important questions discussable like this? !!!

- Lex: where do you agree with Elon Musk on AGI?

- Sam: we align on risks/dangers and on the idea that with AGI life should be better than without !!! how? !!! !!! Sam often answers awkward remarks with counter-questions !!!

- Lex: agree/disagree with Elon?

- Sam: agree on potential risks

- agree that with AGI life on Earth should be better than if AGI had never existed. Elon attacks us on Twitter; I empathize, he cares about AGI safety !!! again the “empathy” technique !!! I’m sure there are other motives too !!! hinting at some game? !!! He saw an old SpaceX video where many people criticized Elon; Elon said they were his heroes and it hurt; he wanted people to see how hard they were trying. Elon was Sam’s hero, even if he’s an a** on Twitter !!! strong judgment !!! I’m glad he exists, but I’d like him to notice how hard we work to do it right !!! good communication technique !!!

- Lex: more love! What do you value in Elon in the context of love/acceptance?

- Sam: he moved the world forward

- electric transport, space; though he’s an a** on Twitter !!! 2nd time !!!, he’s a fun and warm guy

- Lex: I like diversity (opinions, people..) and on Twitter there’s a real battle of ideas…

- Sam: maybe I should reply… but not my style… (maybe someday)

- Lex: you’re both good people deeply concerned about AGI and with great hope/faith in it !!! maybe be concerned about people, not tech? !!!

- Lex: quoting Elon, does ChatGPT seem too WOKE (too tolerant, LGBT+, gender, etc.)? bias?

- Sam: don’t know what WOKE means anymore !!! doubt !!!, early GPTs were too biased; there’ll never be a version the world agrees is unbiased !!! handy to avoid awkward bias questions !!!

- we’ll try to make the base model more neutral

- Lex: bias from employees?

- Sam: 100% there’s such bias; we try to avoid the “SF cognitive bubble”; I’m going on a world tour to talk with diverse people (did this at YC) !!! he tries to use meta-mental models, understand others !!!

- think we avoid the “crazy SF bubble” better than others, but we’re still deep in it !!! admitting imperfection — wins trust !!!

- Lex: distinguish model bias from staff bias?

- Sam: I worry most about bias from people who fine-tune after! we understand that part the least.

- -> This is the central and most important point of the interview and of the topic overall, for solving problems with any tool (AI or otherwise): we don’t know how to understand and listen to each other; without solving this, no AI or AGI will help us agree, and we’ll keep fighting, now with AI and its derivatives.

=====================================

I’ll stop here because:

a. I see no point in continuing the list now that the “basis” is clear and the rest lacks focus;

b. it’s energy-consuming and I’m unsure anyone cares and/or finds it useful.

General comments/questions:

- You are giving people an extremely powerful tool at their current level of development and consciousness (values, meanings..), so they will pursue the same needs and meanings as before, only with a new tool… processes will accelerate. Are you sure we’re not on the “Titanic,” about to speed the ship up?

- You can’t calculate the consequences within one company; why presume to think and act for everyone else?

- What roles do your Ego and ambition play?

- Is it true you built/will build a bunker (like Zuckerberg & co)? If yes, why? If we’re not on the “Titanic,” why a bunker? If we are, why speed up?

- Why doesn’t the company’s name match its content? Why isn’t the code open? How do you train? On what data? Wasn’t the original goal to make OPEN AI for all of humanity? Then why not open source?

- These models are trained on humanity’s labor (texts, translations, designs, art, video (already in the new model), games, code..): why should someone get to appropriate the results? They would be impossible without collective labor: shouldn’t they be open source?

- Universal Basic Income: a big mistake — it ignores human nature → much degradation

4. Sam Altman | Lex Fridman #419 (ENG), key theses and comparison with the first interview:

I see no point in analyzing the second interview here and comparing it with the first; it’s unclear whether anyone needs this. If so, I’ll watch, analyze, and compare it later.

I haven’t watched it — unlikely to be new.

Man is made so that he looks at happiness unwillingly and distrustfully, so that happiness must even be forced upon him.

M. E. Saltykov-Shchedrin

💬 Community | Engage: Ω (Omega) Telegram Chat | Community

You can schedule a meeting via Calendly or Read.ai, or write to me by Email or on Telegram.